
What Is Container Orchestration?

Container orchestration is a technology that automates the deployment, management, and scaling of containerized applications. It simplifies the complex tasks of managing large numbers of containers. Container orchestrators, such as Kubernetes, ensure that these containers interact efficiently across different servers and environments. Orchestrators provide a framework for managing container lifecycles, facilitating service discovery, and maintaining high availability. For microservices architectures, where cloud-native applications consist of numerous interdependent components, this framework is foundational.

By leveraging container orchestration, DevOps teams can streamline provisioning, resource allocation, and scaling, enabling them to fully harness the potential of containerization and align it with their business objectives.

Container Orchestration Explained

Container orchestration automates the deployment, management, and scaling of containerized applications. Enterprises leverage orchestrators to control and coordinate massive numbers of containers, ensuring they interact efficiently across different servers.

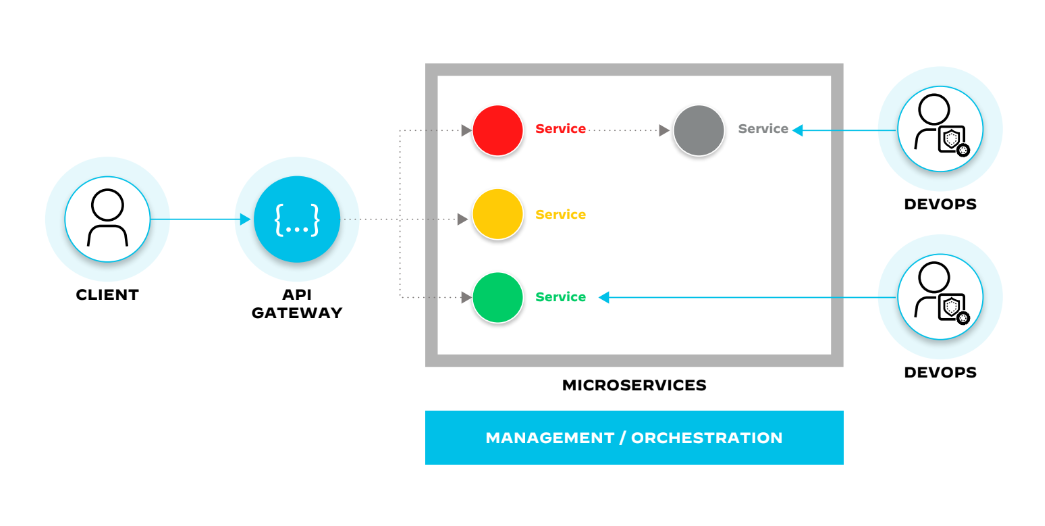

Figure 1: Orchestration layer deploying new versions of microservices, scaling microservices to meet demand, and monitoring microservices for performance and health

Orchestrators like Kubernetes manage lifecycles, facilitate service discovery, and maintain high availability. They enable containers to function in concert, which is essential for microservices architectures where cloud-native applications consist of numerous interdependent components.

The container orchestration market is keeping pace with the adoption of microservices architectures, cloud-native applications, and containerization. Valued at US$745.72 million in 2022, up 26% over a five-year span, the market continues to climb and is expected to reach US$1,084.79 million by 2029.

Orchestration Tools

Orchestration tools provide a framework for automating container workloads, enabling DevOps teams to manage the lifecycles of their containers. These systems, or orchestration engines, facilitate advanced networking capabilities, streamlining the provisioning of containers and microservices while adjusting resources to meet demand. With orchestrators, DevOps teams can wield the full potential of containerization, aligning it with their business objectives.

Popular Container Orchestration Engines

- Kubernetes® (K8s)

- Rancher

- SUSE Rancher

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE) / Anthos

- Red Hat OpenShift Container Platform (OCP)

- Apache Mesos

- Cisco Container Platform (CCP)

- Oracle Container Engine for Kubernetes (OKE)

- Ericsson Container Cloud (ECC)

- Docker Swarm

- HashiCorp Nomad

The advantage of orchestration engines comes from the declarative model they typically employ, which effectively combines the benefits of infrastructure as a service (IaaS) and platform as a service (PaaS).

- IaaS provides the granular control and automation that allows developers to manage the underlying infrastructure, such as servers, storage, and networking. This gives developers the flexibility to customize their deployments to meet specific needs.

- PaaS provides a higher level of abstraction that allows developers to focus on their applications without having to worry about the underlying infrastructure. PaaS makes it easier for engineers to deploy and manage their applications, but it also provides them less control over the infrastructure.

Drawing on the best of both worlds, orchestrators offer developers power and flexibility, as well as a consistent operational experience across environments, from physical machines and on-premises data centers to virtual deployments and cloud-based systems.
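To make the declarative model concrete, here is a minimal Python sketch that is not tied to any real orchestrator API. The user declares a desired state, and a hypothetical `plan_actions` function works out the imperative steps needed to close the gap with what is running. The field names (`image`, `replicas`) loosely mirror a Kubernetes Deployment, but the dictionaries are simplifications assumed for illustration.

```python
from collections import Counter

# Desired state, declared by the user: what should run, not the steps to get there.
# Field names loosely mirror a Kubernetes Deployment; the structure is a simplification.
desired = {"web": {"image": "web:1.4", "replicas": 3}}


def plan_actions(desired: dict, running: list) -> list:
    """Compare the declared state with running (app, image) instances and list the steps needed.

    Apps that appear in `running` but not in `desired` are ignored in this simplified sketch.
    """
    actions = []
    counts = Counter(running)
    for app, spec in desired.items():
        # Instances of this app running any other image are stale and must be stopped.
        for (name, image), n in counts.items():
            if name == app and image != spec["image"]:
                actions += [f"stop {name} ({image})"] * n
        have = counts[(app, spec["image"])]
        if have < spec["replicas"]:
            actions += [f"start {app} ({spec['image']})"] * (spec["replicas"] - have)
        elif have > spec["replicas"]:
            actions += [f"stop {app} ({spec['image']})"] * (have - spec["replicas"])
    return actions


running = [("web", "web:1.3"), ("web", "web:1.4")]
for step in plan_actions(desired, running):
    print(step)
```

Once the planned actions take effect, planning again returns an empty list. That idempotence is what lets an orchestrator reapply the same declaration safely.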

Core features of prominent orchestration engines include scheduling, resource management, service discovery, health checks, autoscaling, and managing updates and upgrades.

Key Components of Orchestrators

The terminology for container orchestration components varies across the tools currently on the market, though the underlying concepts and functionality remain relatively consistent. Table 1 provides a comparative overview of the main components and the corresponding terminology in popular container orchestrators. To introduce the mechanics of orchestration, we’ll use Kubernetes terms.

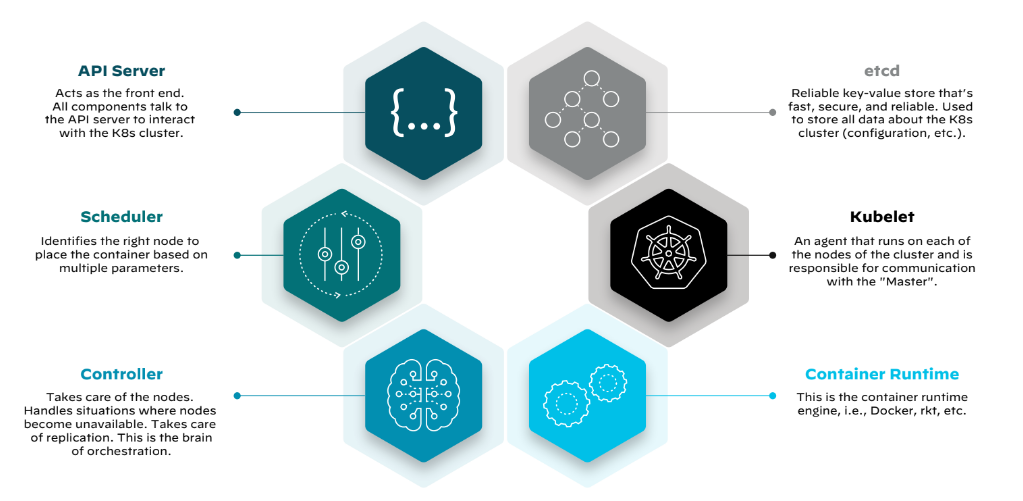

Figure 2: Container orchestration component overview

Orchestration engines like Kubernetes are complex, consisting of several components that work in unison to manage the lifecycle of containers. Understanding these components helps you make the best use of containerization technologies.

The Control Plane

At the heart of Kubernetes lies its control plane, the command center for scheduling and managing the application lifecycle. The control plane exposes the Kubernetes API, orchestrates deployments, and directs communication throughout the system. It also monitors workload health and manages the cluster, instructing worker nodes to run containers whose images the nodes pull from a registry at deployment time.

The Kubernetes control plane comprises several components: etcd, the API server, the scheduler, and the controller-manager.

Etcd

The etcd datastore, originally developed by CoreOS (which Red Hat later acquired), is a distributed key-value store that holds the cluster's configuration data. It informs the orchestrator's actions to maintain the desired application state, as defined by a declarative policy. This policy outlines the optimal environment for an application, guiding the orchestrator in managing properties like instance count, storage needs, and resource allocation.
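As a rough mental model of how cluster state sits in a key-value store, the following Python sketch implements a toy, single-process versioned store. Real etcd is distributed, replicated with Raft, and accessed over gRPC, none of which is modeled here, and the `ToyKV` class is hypothetical. The keys follow the `/registry/<resource>/<namespace>/<name>` layout the Kubernetes API server uses by default, while the values are simplified dictionaries rather than the serialized objects Kubernetes actually stores.

```python
from typing import Any


class ToyKV:
    """Single-process stand-in for a versioned key-value store (not distributed, not durable)."""

    def __init__(self) -> None:
        self.revision = 0  # global counter bumped on every write
        self.data: dict = {}  # key -> (value, revision of the last write to that key)

    def put(self, key: str, value: Any) -> int:
        self.revision += 1
        self.data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str) -> Any:
        return self.data[key][0]

    def get_prefix(self, prefix: str) -> dict:
        # Range read over every key sharing a prefix, e.g. all deployments in a namespace.
        return {k: v for k, (v, _) in sorted(self.data.items()) if k.startswith(prefix)}


store = ToyKV()
store.put("/registry/deployments/default/web", {"image": "web:1.4", "replicas": 3})
store.put("/registry/deployments/default/api", {"image": "api:2.0", "replicas": 2})
rev = store.put("/registry/deployments/default/web", {"image": "web:1.5", "replicas": 3})

print(rev)  # 3
print(store.get("/registry/deployments/default/web")["image"])  # web:1.5
print(list(store.get_prefix("/registry/deployments/default/")))
```

The revision counter hints at why a versioned store suits an orchestrator: components can tell whether the state they last read is still current.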

API Server

The Kubernetes API server plays a pivotal role, exposing the cluster's capabilities via a RESTful interface. It processes requests, validates them, and updates the state of the cluster based on instructions received. This mechanism allows for dynamic configuration and management of workloads and resources.
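The validate-then-persist flow can be sketched in a few lines of Python. This is a hypothetical simplification: the real API server also authenticates, authorizes, and runs admission control before writing to etcd, and it validates far more than the handful of fields checked here. The status codes mirror common HTTP semantics (201 Created, 409 Conflict, 422 Unprocessable Entity), and `handle_create` and `validate` are illustrative names, not Kubernetes functions.

```python
def validate(obj: dict) -> list:
    """Return validation errors for a simplified Deployment-like object."""
    errors = []
    for field in ("apiVersion", "kind", "metadata", "spec"):
        if field not in obj:
            errors.append(f"missing field: {field}")
    if not obj.get("metadata", {}).get("name"):
        errors.append("metadata.name is required")
    replicas = obj.get("spec", {}).get("replicas", 1)
    if not isinstance(replicas, int) or replicas < 0:
        errors.append("spec.replicas must be a non-negative integer")
    return errors


def handle_create(state: dict, obj: dict) -> tuple:
    """Validate a create request and, only if it passes, record it in cluster state."""
    errors = validate(obj)
    if errors:
        return 422, "; ".join(errors)  # rejected requests never touch stored state
    meta = obj["metadata"]
    key = f"{obj['kind'].lower()}s/{meta.get('namespace', 'default')}/{meta['name']}"
    if key in state:
        return 409, f"{key} already exists"
    state[key] = obj
    return 201, key


cluster_state: dict = {}
good = {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 3}}
bad = {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": {}, "spec": {"replicas": -1}}
print(handle_create(cluster_state, good))  # (201, 'deployments/default/web')
print(handle_create(cluster_state, bad))   # (422, 'metadata.name is required; spec.replicas must be a non-negative integer')
print(handle_create(cluster_state, good))  # (409, 'deployments/default/web already exists')
```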

Scheduler

The scheduler in Kubernetes assigns workloads to worker nodes based on resource availability and other constraints, such as quality of service and affinity rules. The scheduler ensures that the distribution of workloads remains optimized for the cluster's current state and resource configuration.
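The Kubernetes scheduler works in two broad steps: it filters out nodes that cannot run a pod, then scores the remaining candidates. The Python sketch below mimics that shape with a single hypothetical scoring rule, favoring the node with the most free capacity left after placement, similar in spirit to the scheduler's default least-allocated scoring. The real scheduler runs many plugins and weighs taints, affinity, and more; the `Node` fields and `schedule` function are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int    # allocatable CPU, in millicores
    cpu_requested_m: int   # CPU already requested by pods on the node
    mem_capacity_mi: int   # allocatable memory, in MiB
    mem_requested_mi: int  # memory already requested by pods on the node
    labels: dict = field(default_factory=dict)


def schedule(pod_cpu_m: int, pod_mem_mi: int, node_selector: dict, nodes: list) -> Optional[str]:
    """Pick a node for a pod: filter out nodes that can't run it, then score the rest."""

    def fits(n: Node) -> bool:
        return (n.cpu_requested_m + pod_cpu_m <= n.cpu_capacity_m
                and n.mem_requested_mi + pod_mem_mi <= n.mem_capacity_mi)

    def matches(n: Node) -> bool:
        return all(n.labels.get(k) == v for k, v in node_selector.items())

    feasible = [n for n in nodes if fits(n) and matches(n)]
    if not feasible:
        return None  # no node qualifies; the pod would remain Pending

    def score(n: Node) -> float:
        # Average fraction of CPU and memory left free after placing the pod.
        cpu_free = (n.cpu_capacity_m - n.cpu_requested_m - pod_cpu_m) / n.cpu_capacity_m
        mem_free = (n.mem_capacity_mi - n.mem_requested_mi - pod_mem_mi) / n.mem_capacity_mi
        return (cpu_free + mem_free) / 2

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 2048, {"disktype": "ssd"}),
    Node("node-b", 4000, 1000, 8192, 6144, {"disktype": "ssd"}),
    Node("node-c", 8000, 0, 16384, 0, {"disktype": "hdd"}),
]
print(schedule(1000, 1024, {"disktype": "ssd"}, nodes))  # node-b: node-a lacks CPU, node-c has the wrong label
print(schedule(1000, 1024, {}, nodes))                   # node-c: most headroom left
print(schedule(5000, 1024, {"disktype": "ssd"}, nodes))  # None
```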

Controller-Manager

The controller-manager maintains the desired state of applications. It operates through controllers, control loops that monitor the cluster's shared state and make adjustments to align the current state with the desired state. These controllers ensure the stability of nodes and pods, responding to changes in the cluster's health to maintain operational consistency.
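A control loop can be reduced to "observe, compare, act," repeated indefinitely. The Python sketch below shows one pass of a hypothetical replica controller and runs it a few times around a simulated failure. A real controller reacts to changes it watches through the API server rather than being called by hand, and the ReplicaSet controller uses its own rules to choose which pods to delete; both are simplified here, and all names are illustrative.

```python
import itertools
from typing import Iterator


def make_name_source(app: str) -> Iterator[str]:
    """Generate pod names like web-1, web-2, ... (a simplification of real generated names)."""
    return (f"{app}-{i}" for i in itertools.count(1))


def reconcile(desired: int, observed: set, new_name: Iterator[str]) -> set:
    """One pass of a control loop: compare observed pods with the desired count and act."""
    pods = set(observed)
    while len(pods) < desired:
        pods.add(next(new_name))       # create pods to cover the shortfall
    while len(pods) > desired:
        pods.remove(sorted(pods)[-1])  # delete surplus pods (simplistic choice of victim)
    return pods


names = make_name_source("web")
pods = reconcile(3, set(), names)  # web-1, web-2, web-3
pods.discard("web-2")              # simulate a pod crashing or its node failing
pods = reconcile(3, pods, names)   # web-1, web-3, web-4
pods = reconcile(2, pods, names)   # scaled down to web-1, web-3
print(sorted(pods))
```

When observed and desired state already agree, a pass changes nothing, which is why the loop can safely run continuously.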

| Container Orchestrator | Control Plane Components | Worker Node Components | Deployment Unit | Service |
| --- | --- | --- | --- | --- |
| Kubernetes | API Server, Scheduler, Controller Manager, etcd | kubelet | Pod | Service |
| Docker Swarm | Manager | Worker | Service | Stack |
| Nomad | Server | Agent | Job | Allocation |
| Mesos | Master | Agent | Task | Job |
| OpenShift | Console, Controller Manager, etcd | Node | Pod | Service |
| Amazon Elastic Container Service (ECS) | Cluster Controller | EC2 instances | Task | Service |
| Google Kubernetes Engine (GKE) | Control Plane | Nodes | Pod | Service |
| Azure Kubernetes Service (AKS) | Control Plane | Nodes | Pod | Service |

Table 1: Common components across various orchestration engines.

Orchestration and Immutable Infrastructure

Unlike traditional servers and virtual machines, which are often modified in place, containers and their underlying infrastructure follow an immutable paradigm: nothing is modified after deployment. Instead, updates or fixes are applied by deploying new containers or servers built from a common image that includes the necessary changes.
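Python's frozen dataclasses offer a small analogy for the immutable paradigm; this is an illustration of the idea, not how containers are implemented. The hypothetical `Instance` type cannot be patched after creation, so an "update" means building new instances from the new image and swapping them in, leaving the old ones untouched.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Instance:
    name: str
    image: str


fleet = (Instance("web-1", "web:1.4"), Instance("web-2", "web:1.4"))

# Patching in place is not allowed: an instance is immutable once created.
try:
    fleet[0].image = "web:1.5"
except FrozenInstanceError:
    print("in-place change rejected")

# An update instead creates new instances from the new image; the old fleet is unchanged.
updated = tuple(replace(i, name=f"{i.name}-v2", image="web:1.5") for i in fleet)
print([i.image for i in fleet], [i.image for i in updated])
```

Because the old instances still exist unchanged, reverting is just a matter of routing back to them, which is one practical benefit of immutability.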

Immutable infrastructure's inherent programmability enables automation. Infrastructure as code (IaC) stands out as a hallmark of modern infrastructure, allowing teams to programmatically provision, configure, and manage the infrastructure their applications require. Together, container orchestration, immutable infrastructure, and IaC-driven automation deliver a high degree of flexibility and scalability.

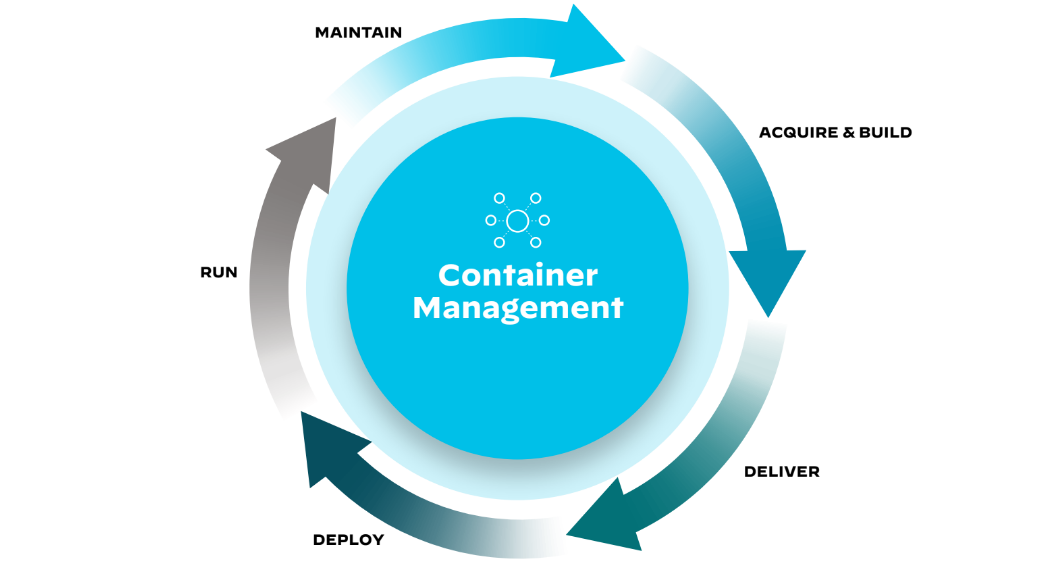

Container Orchestration and the Pipeline

Propelled by the twin engines of containerization and DevOps, container orchestration brings speed and scalability together to underwrite today's dynamic and demanding production pipeline.

Figure 3: CI/CD pipeline application development lifecycle

Acquire and Build Pipeline Phase

In the acquire and build stage, developers pull code from version control repositories, initiating the build process. Automated tools compile the source code into build artifacts, which a tool like Docker or BuildKit then packages into a container image ready for deployment. Once built, the image is stored in a registry such as Docker Hub or Google Artifact Registry.

The acquire and build phase promotes consistent construction of the application, with scripts managing dependencies and running preliminary tests. The outcome is a dependable build that, when integrated with the main branch, triggers further automated processes.

Run Phase

As the build phase concludes, the pipeline executes the code in a controlled environment. Running the container image in a staging environment can be done using a container orchestration tool such as Kubernetes. This crucial step involves the team conducting a series of automated tests to validate the application's functionality. Developers actively seek out and address bugs, ensuring the progression of only high-quality code through the pipeline.

Delivery Phase

In the delivery stage of the CI/CD pipeline, teams automate the journey of new code from repository to production readiness. Every commit initiates a sequence of rigorous automated tests and quality checks, ensuring that only well-vetted code reaches the staging environment. Here, the software undergoes additional, often client-facing, validations. The process encapsulates the build's promotion through environments, each serving as a proving ground for stability and performance. By the end of the phase, the build is a release candidate that has cleared every gate and is ready for production.

Deploy Phase

In the deploy stage, the application reaches its pivotal moment as teams roll it out to the production environment. Container orchestration tools, such as Kubernetes, assume control, scaling the application and updating it with minimal downtime. Teams have rollback mechanisms at the ready, allowing them to revert to previous versions if any issues emerge. At this point, the application becomes operational, serving its intended users and fulfilling its purpose in the digital ecosystem.
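A rolling update with rollback can be sketched in Python as follows. Kubernetes Deployments perform rolling updates governed by `maxSurge` and `maxUnavailable`, and `kubectl rollout undo` reverts to a previous revision; this sketch swaps those for a single hypothetical `batch` parameter and a caller-supplied health check, and it does not model surge capacity or real traffic.

```python
from typing import Callable, List, Optional


def rolling_update(current: List[str], new_image: str, batch: int,
                   healthy: Callable[[str], bool],
                   trace: Optional[List[List[str]]] = None) -> List[str]:
    """Replace replicas `batch` at a time; if the new version fails its health check, roll back.

    `current` lists the image each replica runs; the return value is the fleet after the rollout.
    """
    running = list(current)
    for start in range(0, len(running), batch):
        for i in range(start, min(start + batch, len(running))):
            running[i] = new_image       # each replica is replaced, not patched
        if trace is not None:
            trace.append(list(running))  # record the fleet after each batch
        if not healthy(new_image):
            return list(current)         # revert every replica to the previous revision
    return running


steps: List[List[str]] = []
print(rolling_update(["web:1.4"] * 4, "web:1.5", 2, lambda image: True, steps))
print(steps[0])  # ['web:1.5', 'web:1.5', 'web:1.4', 'web:1.4']: mixed versions mid-rollout
print(rolling_update(["web:1.4"] * 4, "web:1.6-bad", 2, lambda image: not image.endswith("-bad")))
```

Replacing a batch at a time keeps most replicas serving during the rollout, and a failed check after the first batch limits how many replicas ever run the bad version.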

Maintain Phase

Post-deployment, the team transitions to actively maintaining the application. They use runtime tooling to continuously monitor performance, log errors, and gather user feedback, all of which inform future enhancements as well as container and Kubernetes security.

As developers fine-tune the application, apply security patches, and roll out new features, the maintenance phase underscores the iterative nature of modern application development. Invariably, the product continues to evolve to meet user demands and integrate the latest technological advancements.

Benefits of Container Orchestration

Container orchestration offers a suite of benefits that align with the objectives of DevOps, ultimately enhancing operational efficiency and reducing overheads in cloud environments.

Enhances Scalability

Container orchestration platforms enable businesses to scale containerized applications in response to fluctuating demand, without human intervention or attempts to predict application load. The orchestrator’s bin packing and autoscaling capabilities, coupled with public cloud infrastructure as code, dynamically allocate resources to maintain performance during peak loads.
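Autoscaling can be made concrete with the proportional rule the Kubernetes Horizontal Pod Autoscaler documents: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio falls within a tolerance (0.1 by default). The Python sketch below applies that rule; the `min_replicas`/`max_replicas` defaults are illustrative assumptions, and the real controller adds stabilization windows and other safeguards not shown here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional scaling rule, clamped to illustrative replica bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: skip scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, 90, 60))   # 5: CPU at 90% against a 60% target
print(desired_replicas(3, 63, 60))   # 3: within tolerance
print(desired_replicas(4, 20, 60))   # 2: scale in when load drops
print(desired_replicas(8, 180, 60))  # 10: capped at max_replicas
```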

Facilitates Resilience

By distributing container instances across multiple hosts, orchestration tools bolster application resilience. They detect failures and automatically reinitiate containers, minimizing downtime and maintaining service continuity.
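When a container keeps failing, Kubernetes restarts it according to the pod's restart policy with an exponential back-off delay (10s, 20s, 40s, and so on) capped at five minutes. The Python sketch below reproduces that schedule and wraps it in a hypothetical `supervise` helper that records the delays instead of actually sleeping; it does not model the back-off reset that happens after a container runs successfully for a while.

```python
from typing import Callable, List, Tuple


def restart_delay(restart_count: int, base_s: int = 10, cap_s: int = 300) -> int:
    """Seconds to wait before the next restart: doubles after each failure, up to a cap."""
    return min(base_s * 2 ** restart_count, cap_s)


def supervise(start: Callable[[], bool], max_restarts: int) -> Tuple[bool, List[int]]:
    """Run a task and restart it after failures; return (succeeded, delays waited)."""
    waited: List[int] = []
    for attempt in range(max_restarts + 1):
        if start():
            return True, waited
        if attempt < max_restarts:
            waited.append(restart_delay(attempt))  # a real supervisor would sleep this long
    return False, waited


print([restart_delay(n) for n in range(7)])  # [10, 20, 40, 80, 160, 300, 300]
outcomes = iter([False, False, True])        # a container that fails twice, then stays up
print(supervise(lambda: next(outcomes), max_restarts=5))  # (True, [10, 20])
```

The growing delay keeps a crashing container from consuming the node with rapid restart attempts while still recovering quickly from one-off failures.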

Promotes Efficiency

Orchestration engines match resources to what an application actually requires across usage scenarios, which prevents rampant overprovisioning and spares organizations from sizing infrastructure for peak (high-water-mark) utilization. This efficiency reduces infrastructure costs and maximizes return on investment.
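The savings come largely from packing workloads densely onto shared nodes. The Kubernetes scheduler places pods one at a time rather than running a global optimization, so the Python sketch below uses a classic bin-packing heuristic, first-fit decreasing, purely to illustrate the idea. The CPU requests and node size are made-up values.

```python
def first_fit_decreasing(requests_m: list, node_capacity_m: int) -> list:
    """Pack CPU requests (millicores) onto equally sized nodes using first-fit decreasing."""
    nodes: list = []
    for req in sorted(requests_m, reverse=True):
        if req > node_capacity_m:
            raise ValueError(f"request {req}m exceeds node capacity")
        for node in nodes:
            if sum(node) + req <= node_capacity_m:
                node.append(req)  # first existing node with room wins
                break
        else:
            nodes.append([req])   # no existing node has room: add one
    return nodes


requests = [500, 1500, 250, 2000, 750, 1000, 250, 1750]
packed = first_fit_decreasing(requests, 4000)
print(len(packed), packed)  # 2 [[2000, 1750, 250], [1500, 1000, 750, 500, 250]]
```

Eight workloads that would need eight dedicated machines fit on two 4000m nodes here, which is the kind of consolidation that keeps organizations from provisioning for every workload's peak separately.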

Simplifies Management

Container orchestrators provide a unified interface to manage clusters of containers, abstracting complex tasks and reducing the operational burden. Teams can deploy updates, monitor health, and enforce policies with minimal manual intervention.

Improves Security

Container orchestration enhances security by automating the deployment of patches and security updates. It enforces consistent security policies across the entire container fleet, reducing the risk of vulnerabilities.
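Consistent policy enforcement means every workload is checked against the same rules before it runs. In Kubernetes this is typically done with the built-in Pod Security Admission or a policy engine; the Python sketch below is a hypothetical, much smaller check over a simplified pod spec. It rejects privileged containers, requires `runAsNonRoot: true`, and flags images that are untagged or tagged `latest`. The rule set and the `policy_violations` function are assumptions for illustration.

```python
def policy_violations(pod: dict) -> list:
    """Check a simplified pod spec against a hypothetical fleet-wide security policy."""
    problems = []
    for c in pod.get("spec", {}).get("containers", []):
        name, image = c.get("name", "?"), c.get("image", "")
        sc = c.get("securityContext", {})
        if sc.get("privileged", False):
            problems.append(f"{name}: privileged containers are not allowed")
        if sc.get("runAsNonRoot") is not True:
            problems.append(f"{name}: must set runAsNonRoot: true")
        # An image with no tag or digest resolves to ':latest'; check only the last path segment
        # so a registry port such as 'registry:5000/app' is not mistaken for a tag.
        last = image.rsplit("/", 1)[-1]
        if "@" not in image and (":" not in last or last.endswith(":latest")):
            problems.append(f"{name}: image must be pinned to a tag other than 'latest' or a digest")
    return problems


pod = {"spec": {"containers": [
    {"name": "web", "image": "registry.example.com/web:1.5", "securityContext": {"runAsNonRoot": True}},
    {"name": "debug", "image": "busybox", "securityContext": {"privileged": True}},
]}}
for problem in policy_violations(pod):
    print(problem)
```

Running the same check in the pipeline and at admission time is one way to keep the policy consistent across the entire fleet.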

Enables Portability

Orchestration ensures containerized applications remain agnostic to the underlying infrastructure, facilitating portability across different cloud environments and on-premises data centers.

Accelerates Deployment Cycles

By automating deployment processes, orchestration tools shorten the time from development to production, enabling rapid iteration and faster time to market for new features.

Supports DevOps Practices

By integrating with CI/CD pipelines and increasing the agility of software development, container orchestration fosters collaboration between development and operations teams. Capabilities like health monitoring and self-healing reduce the time teams spend on system support and troubleshooting, improving DevOps productivity.

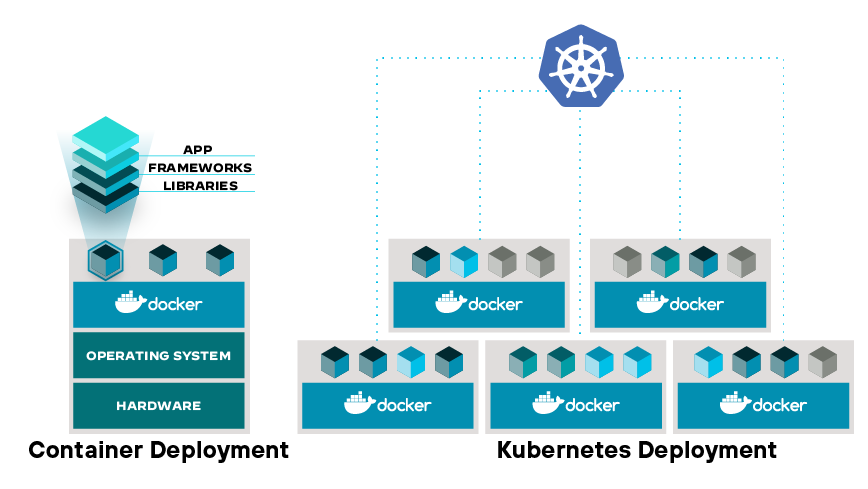

The Container Ecosystem

Quite simply, the container ecosystem represents a significant shift in application development and deployment. Encompassing a range of components — from runtime engines to orchestration platforms, registries, and security tools — it offers enterprises the all-important efficiency today’s fast-paced digital landscape demands.

Of course, at the heart of the ecosystem lies the synergy between the container engine and the orchestration engine. Together, these technologies guide containerized applications through the intricate stages of their lifecycle.

The container engine creates and packages individual containers, while the orchestrator engine manages and orchestrates multiple containers across a distributed infrastructure.

During development, the container engine facilitates rapid prototyping and testing, allowing developers to iterate quickly and efficiently. As the application matures, the orchestrator transitions it into production, providing a robust and scalable foundation for handling real-world workloads.

Figure 4: Docker and Kubernetes representing the dynamics of the container and orchestration engines

Strategic Implications for Business Leaders

Agility and Speed in Software Deployment

The container ecosystem accelerates the deployment of applications. By encapsulating applications in containers, organizations can swiftly move from development to production, irrespective of the underlying environment. This agility is crucial for organizations that need to rapidly adapt to market changes or user demands.

Enhanced Efficiency and Resource Optimization

Offering an alternative to traditional virtual machines, containers share the underlying OS kernel and consume fewer resources. This efficiency translates into reduced operational costs and improved utilization of computing resources, a key advantage for enterprises managing large-scale applications.

Scalability and Flexibility

For digital enterprises facing fluctuating demand, orchestrators within the container ecosystem make it possible to scale applications without compromising performance. Taken as a whole, the container ecosystem improves on earlier approaches to scaling and resource availability.

Consistency and Portability Across Environments

The container ecosystem ensures consistency and portability. Applications packaged in containers can run uniformly and reliably across different computing environments, from on-premises data centers to public clouds.

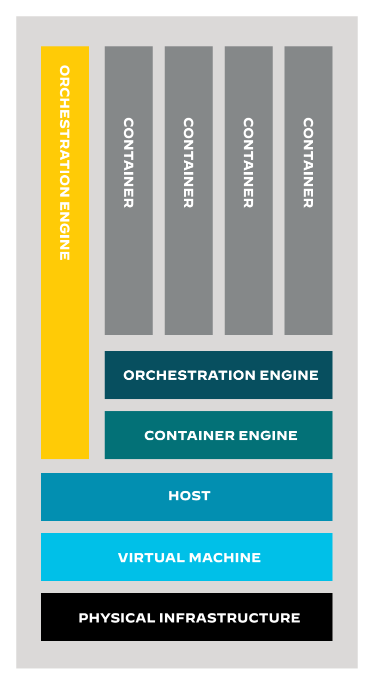

Figure 5: Anatomy of hosted container environment

Preparing for a Container-Driven Future

The future points to a digital world where most, if not all, applications run on containers. For executives, understanding the synergy behind the container ecosystem provides a strategic advantage. An informed point of view can help you anticipate and effectively meet the evolving demands of modern software development, and do so with optimal ROI.